There’s a joke in Silicon Valley about how AI was developed: Privileged coders were building machine learning algorithms to replace their own doting parents with apps that deliver their meals, drive them to work, automate their shopping, manage their schedules, and tuck them in at bedtime.

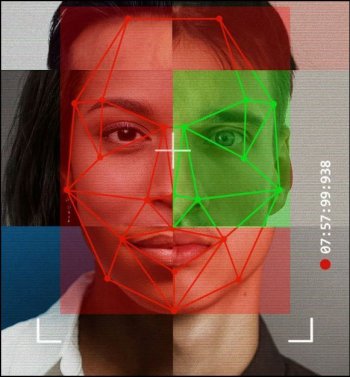

As whimsical as that may sound, AI-driven services often target a demographic that mirrors their creators: white, male workers with little time and more disposable income than they know what to do with. “People living in very different circumstances have very different needs and wants that may or may not be helped by this technology,” says Kanta Dihal at the University of Cambridge’s Leverhulme Centre for the Future of Intelligence in England. She is an expert in an emerging effort to decolonize AI by promoting an intersectional definition of intelligent machines, one created for and relevant to a diverse population. Such a shift requires diversifying not only Silicon Valley but also the understanding of AI’s potential, who it stands to help, and how people want to be helped.

Biased algorithms have made headlines in many industries. Apple Health failed to include a menstrual cycle tracking feature until 2015. Leaders at Amazon, where automation reigns supreme, scrapped efforts to harness AI to sift through resumes in 2018 after discovering that their algorithm favored men, downgrading resumes that mentioned all-women’s colleges or women’s sports teams. In 2019, researchers discovered that a popular algorithm used to determine whether medical care was required was racially biased, recommending less care for Black patients than for equally ill white patients because it calculated patient risk scores based on individuals’ annual medical costs. Because Black patients had, on average, less access to medical care, their costs were artificially low, and the algorithm wrongly associated Blackness with less need for medical treatment.

Industries like banking have been more successful than others in implementing equitable AI (think: creating new standards for the use of AI and developing systems of internal checks and balances against bias), according to Anjana Susarla, the Omura-Saxena Professor in Responsible AI at Michigan State University. Health care and criminal justice, where algorithms have shown racial bias in predicting recidivism, are more troubling examples. Susarla says decolonizing AI will require the collective oversight of boards of directors, legislators, and regulators, not to mention those who implement and monitor the day-to-day outcomes of AI in every industry—not just the techies behind the tools.

“You have to have some separation between the person who created the AI and the person who can take some responsibility for the consequences that will arise when they are implementing it,” Susarla says.

Continue reading: https://neo.life/2022/06/what-will-it-take-to-decolonize-artificial-intelligence/
